VoiceOver is Apple’s built-in screen reader, which lets people use iPhone, iPad, Mac, and other Apple devices without needing to see the screen. Designed for users who are blind or have low vision, VoiceOver uses spoken feedback and gestures to let people navigate their devices independently. But its usefulness extends far beyond reading text out loud. With VoiceOver, users can browse the web, write emails, use apps, and even take photos, all with clarity, precision, and speed.

In this article, you’ll learn what VoiceOver is, how it works, and why it stands out from other screen readers. We’ll explore where it’s available across Apple’s ecosystem, the powerful features that make it unique, and the history behind its development. Whether you’re new to accessibility or want to better understand Apple’s approach to inclusive design, this guide will walk you through everything you need to know about VoiceOver.

What Is VoiceOver and How Does It Work?

VoiceOver is an industry‑leading screen reader that tells you exactly what’s happening on your screen, audibly, in braille, or both. Part of Apple’s suite of accessibility features, it lets users operate their devices without needing to see the screen.

It works by providing spoken and braille feedback, describing what’s on screen and letting users interact through gestures, keyboard commands, or connected braille displays. VoiceOver isn’t just a text-to-speech tool; it’s a fully integrated navigation system built into every Apple platform.

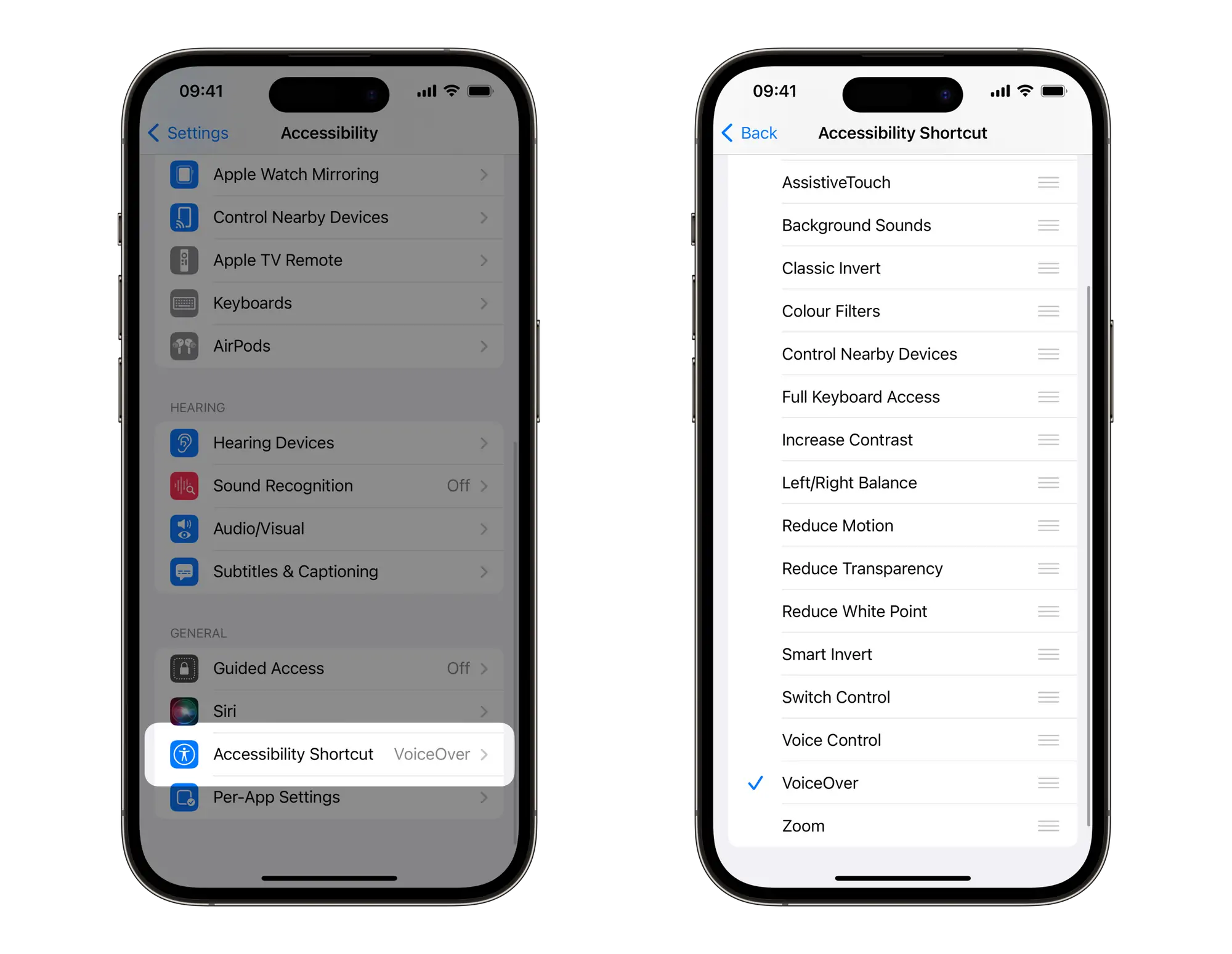

With VoiceOver turned on, users can touch or swipe the screen to hear descriptions of apps, menus, buttons, text, or images. On a Mac, the same control is achieved using a keyboard and trackpad. Every element — from icons and notifications to complex web pages — becomes accessible through speech and haptic cues. VoiceOver can even describe images using on-device intelligence and supports detailed controls like rotor gestures for adjusting settings on the fly.

Where You Can Use It in the Apple Ecosystem

VoiceOver is available on iPhone, iPad, Mac, Apple Watch, Apple TV, and even Vision Pro. Each version is adapted to the unique interface of the device, but the core experience remains consistent. That means someone who learns VoiceOver on iPhone will feel at home when switching to a Mac or using an Apple Watch.

For example, VoiceOver on Apple Watch lets users swipe through complications and receive haptic taps for navigation. On Apple TV, it speaks menu items and playback controls. Apple Vision Pro extends this further into the spatial computing realm, offering screen reader support for visual elements in 3D space — a first in consumer tech.

Key Features That Make VoiceOver Unique

What sets VoiceOver apart is how deeply it’s integrated. It supports over 40 languages, works with over 70 different braille displays, and allows full control of the device, not just basic reading. Features like the rotor let users change settings mid-task. Spoken content can be customized by voice, pitch, and speed. And with braille input and output support, users can write, read, and navigate with complete tactile control.

These features aren’t bolt-ons. They’re part of the OS from the ground up — enabling users to do everything from composing music to managing spreadsheets, all without sight.

A Brief History of VoiceOver at Apple

VoiceOver isn’t a late addition to Apple’s platforms; it has been part of the company’s DNA for nearly two decades. It first launched in 2005 with Mac OS X Tiger, marking the first time a mainstream desktop operating system included a fully integrated screen reader out of the box. Unlike third-party solutions, VoiceOver required no separate download or installation. It worked from the moment the Mac was turned on.

Apple then made headlines in 2009 when VoiceOver arrived on the iPhone 3GS, setting a new standard for mobile accessibility. For the first time, blind users could navigate a touchscreen device independently — a feat many experts thought was impossible at the time. VoiceOver translated taps and gestures into spoken feedback, empowering users to interact with their phone just like anyone else.

Since then, VoiceOver has expanded across every Apple platform — including iPad, Apple Watch, Apple TV, and even Vision Pro. Over the years, it has gained support for dozens of languages, integrated braille display compatibility, and added intelligent image descriptions, rotor controls, and haptic feedback. More recently, Apple has introduced updates that make VoiceOver even more responsive, especially when working with apps that use complex layouts or dynamic content.

By continuously improving VoiceOver and keeping it free and native, Apple has pushed the entire industry forward. Accessibility can no longer be an afterthought. For Apple, accessibility starts at the system level, and VoiceOver remains one of its most impactful innovations.

Why All of This Matters for Accessibility

For millions of users around the world, VoiceOver is more than a feature — it’s a gateway to independence. Designed for people who are blind or have low vision, VoiceOver removes the barriers that have historically made digital devices difficult or impossible to use without sight. It transforms iPhones, Macs, and other Apple devices into fully navigable tools, with no need for visual input.

The power of VoiceOver lies in its detail and flexibility. It doesn’t just read screen text aloud; it describes elements, gives context, and offers precise control. A user can hear whether a button is selected, what’s under their finger, or what’s changing on the screen. Combined with a braille display, they can also read and write in real time, enabling everything from composing documents to coding or editing photos.

This level of access makes VoiceOver invaluable for education, employment, and everyday life. Students can follow classroom materials on iPad. Professionals can work in spreadsheets, email, and documents on Mac. Travelers can navigate apps like Maps or Calendar while on the move. Even photography becomes accessible, thanks to VoiceOver’s ability to describe scenes and faces through the camera.

Apple’s approach also emphasizes privacy and dignity. Users don’t need someone else to read their screen or type for them. Everything can be done independently — whether it’s checking bank balances, ordering food, or replying to a message. And because VoiceOver is built into every Apple device, no one needs to pay extra for specialized tools or software. It’s ready to go, right out of the box.

By integrating accessibility at the core of its platforms, Apple has turned VoiceOver into something more than just a screen reader. It’s a statement — that technology should be usable by everyone, regardless of vision.

Built In So Everyone Can Belong

VoiceOver isn’t an optional extra. It’s part of Apple’s commitment to making every device inclusive from the ground up. By building it into iPhone, iPad, Mac, Apple Watch, and beyond, Apple ensures that anyone — regardless of vision — can enjoy the full experience of their technology without compromise.

There’s no separate app to install, no additional cost, and no need for special hardware. Whether someone is setting up a new MacBook or picking up a friend’s iPhone for the first time, VoiceOver is right there, ready to guide them through the interface. It adapts to each user’s needs, responds to gestures and commands naturally, and continues to evolve as Apple’s platforms grow more powerful.

This seamless integration shows that accessibility doesn’t have to mean complexity. VoiceOver proves that thoughtful design can empower users without adding friction or clutter. It’s not about creating a separate experience — it’s about making the default experience work for everyone.

That’s why VoiceOver matters. It turns a smartphone, a laptop, or even a spatial computer into a fully usable tool for someone who doesn’t rely on sight. It’s an invitation to explore, to create, to participate — and to belong.